Technical Documentation Camera

ArUco Marker Detection and Color Tracking using OpenCV and Python

This script uses OpenCV (a computer vision library) with Python to detect ArUco markers in a live webcam feed. It also uses color detection to track blue and yellow objects in the frame, which can be used to track lines drawn on the playing canvas.

Purpose

The primary objective of this script is to:

- Detect ArUco markers, a type of augmented reality marker, using OpenCV's ArUco module. (Track little endians)

- Identify and track blue and yellow objects in the webcam feed based on their color properties (HSV range).

Design Choices

OpenCV and Python

OpenCV is chosen for its robust computer vision capabilities and ease of use in Python. It is also free to use, which keeps the cost of the project low.

Python's simplicity and extensive libraries make it ideal for rapid prototyping and development in computer vision applications. It can also run on a Raspberry Pi without many adjustments.

Color Detection

To track blue and yellow objects, the script converts each webcam frame from the BGR color space (the channel order OpenCV uses for captured frames) to the HSV color space. HSV (Hue, Saturation, Value) simplifies color detection because it separates the color itself (hue) from its intensity and brightness. Thresholds are set to identify specific ranges of blue and yellow within the feed. Adjust these ranges if the detection is too wide or too narrow.

Marker and Color Visualization

Detected ArUco markers are outlined with green lines, and their IDs and orientations are displayed on the frame. Blue and yellow objects that meet the area threshold are bounded by rectangles and labeled with their respective colors. The coordinates of these objects are also displayed on the frame, so you can check whether the program detects the objects correctly.

Functionality Overview

- Webcam Access and Configuration

The script accesses the default webcam and configures its properties such as frame width and height.

- Color Detection

Blue and yellow color ranges in the HSV color space are defined. The script continuously captures frames, converts them to HSV, and applies color masks to identify blue and yellow objects. Detected areas meeting certain size criteria are marked and their coordinates displayed on the frame.

- ArUco Marker Detection

ArUco dictionaries are defined and utilized for marker detection. Detected markers are outlined, labeled with their IDs and orientations, and their centers are highlighted with orientation lines.

- Display and User Interaction

The processed frames are displayed in a window. The script exits upon pressing the 'q' key.


Running the Script

Make sure you have the following on your device:

Python

Ensure Python is installed on your system. You can download it from the official Python website (python.org).

OpenCV

Use pip, Python's package manager, to install OpenCV. Open a terminal or command prompt and run:

pip install opencv-python

Execution Steps:

Script Initialization

Save the provided Python script in a convenient location on your system.

Setting up the Webcam

- Connect a webcam to your computer.

- Ensure the webcam is functioning correctly and properly positioned.

Running the Script

- Open a terminal or command prompt.

- Navigate to the directory where the script is saved using the cd command.

Execute the Script

- Run the script by typing:

python arucoDetectionColorCoordinates.py

Interacting with the Application

- Once the script starts running, a window displaying the webcam feed will appear.

- Place blue and yellow objects within the webcam's view.

- ArUco markers, if detected, will be outlined with their IDs and orientations displayed.

- Blue and yellow objects meeting area criteria will be highlighted and their coordinates shown on the frame.

Exiting the Application

- To exit the application, press the 'q' key on your keyboard. This will close the window displaying the webcam feed.

The code explained

This documentation outlines the functionality and usage of the Color Detection and ArUco Marker Recognition system implemented in Python using the OpenCV library. The system is designed to detect and visualize various shades of red and white in a live camera feed, alongside the recognition of ArUco markers.

Color Detection

- Red Color Detection:

The system detects red color in the frame using HSV color space. The red color range is defined in terms of lower and upper bounds.


The system then applies the red color mask to the frame and finds contours to identify red areas.


ArUco Marker Recognition

The system uses ArUco marker recognition to identify markers in the frame. ArUco dictionary and parameters are defined, and the detectMarkers function is used to find marker corners and IDs.


If markers are detected, the system utilizes the aruco_display function to draw lines, calculate marker centers, orientation angles, and display relevant information on the frame.
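The body of `aruco_display` is not reproduced here, so the following is only a sketch of how a marker's center and orientation could be derived from its four corners. `marker_pose` is a hypothetical helper, and the angle convention (degrees, counter-clockwise, 0 pointing right on screen) is an assumption.

```python
import math


def marker_pose(corners):
    """Return ((cx, cy), angle_deg) for four (x, y) corners.

    Corners are expected in ArUco order: top-left, top-right,
    bottom-right, bottom-left, relative to the marker itself.
    """
    cx = sum(x for x, _ in corners) / 4.0
    cy = sum(y for _, y in corners) / 4.0
    (tl_x, tl_y), (tr_x, tr_y) = corners[0], corners[1]
    # Midpoint of the marker's top edge: the direction it "faces".
    front_x = (tl_x + tr_x) / 2.0
    front_y = (tl_y + tr_y) / 2.0
    # Image y grows downward, so flip it to get a counter-clockwise angle.
    angle = math.degrees(math.atan2(cy - front_y, front_x - cx))
    return (cx, cy), angle


upright = [(0, 0), (10, 0), (10, 10), (0, 10)]
rotated = [(10, 0), (10, 10), (0, 10), (0, 0)]  # turned 90 degrees clockwise
center, heading = marker_pose(upright)
_, rotated_heading = marker_pose(rotated)
```

With this convention an upright marker has a heading of 90 degrees (facing up on screen) and the same marker turned a quarter turn clockwise has a heading of 0 degrees.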


Empty spot detection

Introduction

This documentation outlines the functionality and usage of the Empty Spot Detection system implemented in Python using the OpenCV library. The system is designed to identify various shades of white in a live camera feed, with a specific focus on detecting empty spots within the captured frame.

Functionality

System Setup

The system initializes the camera, configuring it to a Full HD resolution (1920x1080). Users may need to adjust the camera index based on their setup.


Image Processing

- Color Space Conversion: Frames are converted to the HSV color space to facilitate better detection of white shades.


Contour Detection

Contours are identified in the processed image using OpenCV's contour-finding functions.


Visualization

Contours are drawn around different shades of white, bounding rectangles are outlined, and centroids are marked on the frame.


Display

The processed frame, highlighting different shades of white and displaying contours and centroids, is shown in a window.


Termination

The system can be terminated by pressing 'q'.


Usage

- Ensure that the OpenCV library is installed (pip install opencv-python).

- Adjust the camera index in the script (usually 0 for the default camera).

- Run the script to observe the live feed with highlighted empty spots.

Adjustable Parameters

- Camera Resolution: Modify the CAP_PROP_FRAME_WIDTH and CAP_PROP_FRAME_HEIGHT values for the desired resolution.

- HSV Thresholds: Adjust lower_white and upper_white values to encompass the range of shades representing empty spots. Fine-tune based on environmental conditions and lighting.

Angle Calculations

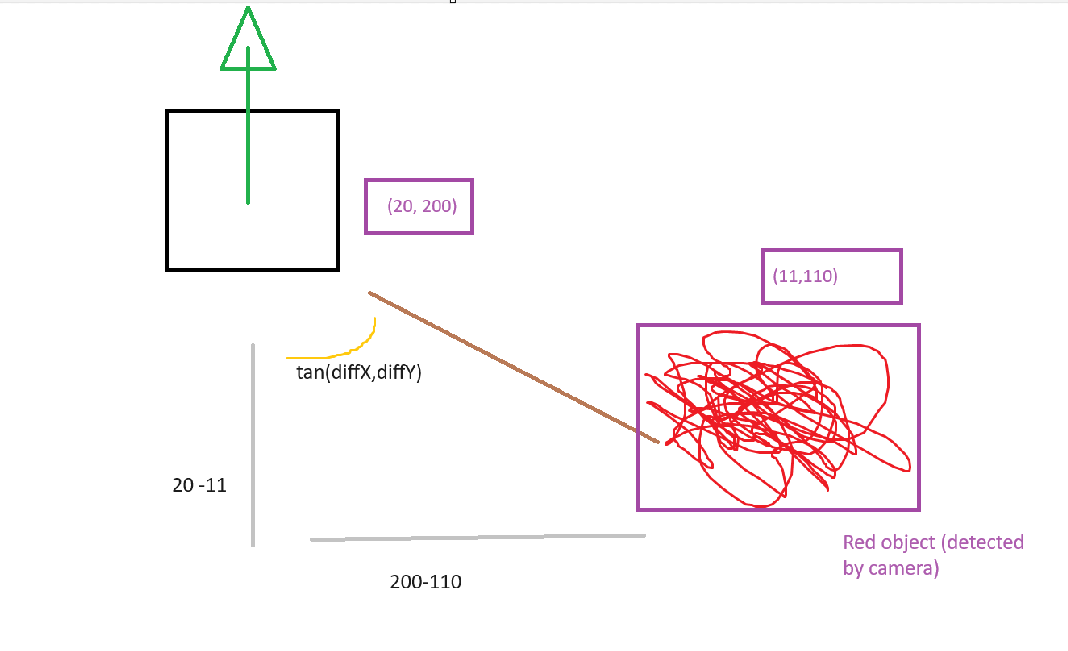

Measuring the angle between two moving objects is fairly simple: it boils down to using the right trigonometric function (sine, cosine, or tangent) in the calculation.

Things are easier to follow with a visual, so here is a drawing that explains the whole thing.

As you can see, we have two coordinates: that of the square, which represents an ArUco marker, and that of the red scribble, which represents a detected red object. From those two coordinates we can calculate the angle using the tangent of the horizontal and vertical offsets between them.
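The calculation can be sketched as below. `angle_to_target` is a hypothetical name, and the sketch uses `math.atan2` rather than a plain arctangent so that vertical offsets and all four quadrants are handled; the original function may differ.

```python
import math


def angle_to_target(marker_xy, target_xy):
    """Angle in degrees from the marker to the target.

    0 points right on screen and angles grow counter-clockwise.
    """
    dx = target_xy[0] - marker_xy[0]
    dy = marker_xy[1] - target_xy[1]  # flipped: image y grows downward
    return math.degrees(math.atan2(dy, dx))


right = angle_to_target((0, 0), (10, 0))
up = angle_to_target((0, 0), (0, -10))
diagonal = angle_to_target((0, 0), (10, -10))
```

A target directly to the right gives 0 degrees, one directly above the marker on screen gives 90 degrees, and one up and to the right gives 45 degrees.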


This alone, however, is not enough for our project, because we want the ArUco marker to face the object. The final implementation therefore works as follows.

Once a red object is detected, it is added to an array.


This data is then used once an ArUco marker is detected.
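As a sketch of how the stored red objects might be combined with a detected marker, the code below picks the nearest object and computes how far the marker must turn to face it. `red_objects`, `nearest_target`, and `turn_angle` are hypothetical names, and choosing the nearest object is an assumption; the original code may select its target differently.

```python
import math

# Centers of detected red objects as (x, y) pixels; sample data for illustration.
red_objects = [(100, 0), (10, 0)]


def nearest_target(marker_xy, targets):
    """Return the target closest to the marker."""
    return min(targets, key=lambda t: math.hypot(t[0] - marker_xy[0],
                                                 t[1] - marker_xy[1]))


def turn_angle(marker_xy, marker_heading_deg, target_xy):
    """Signed turn in degrees (counter-clockwise positive) to face the target."""
    dx = target_xy[0] - marker_xy[0]
    dy = marker_xy[1] - target_xy[1]  # flipped: image y grows downward
    bearing = math.degrees(math.atan2(dy, dx))
    # Wrap the difference into [-180, 180) so the marker takes the short way.
    return (bearing - marker_heading_deg + 180.0) % 360.0 - 180.0


target = nearest_target((0, 0), red_objects)
turn = turn_angle((0, 0), 90.0, target)
```

Here a marker at the origin that faces up (90 degrees) needs a turn of -90 degrees, a quarter turn clockwise, to face the object on its right.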
